I’m indebted to George Monbiot for this article, and for ‘Tolly’ as a nickname for Iain Tolhurst.

Many articles from people that I follow online pass through my ‘inbox’.

But there was something special about a recent article by George Monbiot, published in the Guardian on December 5th, and I have great pleasure in republishing it here with George’s permission.

ooOOoo

Shaking It Up

Posted on 7th December 2025

A eureka moment in the pub could help transform our understanding of the ground beneath our feet.

By George Monbiot, published in the Guardian 5th December 2025

It felt like walking up a mountain during a temperature inversion. You struggle through fog so dense you can scarcely see where you’re going. Suddenly, you break through the top of the cloud, and the world is laid out before you. It was that rare and remarkable thing: a eureka moment.

For the past three years, I’d been struggling with a big and frustrating problem. In researching my book Regenesis, I’d been working closely with Iain Tolhurst (Tolly), a pioneering farmer who had pulled off something extraordinary. Almost everywhere, high-yield farming means major environmental harm, due to the amount of fertiliser, pesticides and (sometimes) irrigation water and deep ploughing required. Most farms with apparently small environmental impacts produce low yields. This, in reality, means high impacts, as more land is needed to produce a given amount of food. But Tolly has found the holy grail of agriculture: high and rising yields with minimal environmental harm.

He uses no fertiliser, no animal manure and no pesticides. His techniques, the result of decades of experiment and observation, appear to enrich the crucial relationships between crops and microbes in the soil, through which soil nutrients must pass. It seems that Tolly has, in effect, “trained” his soil bacteria to release nutrients when his crops require them (a process called mineralisation), and lock them up when his crops aren’t growing (immobilisation), ensuring they don’t leach from the soil.

So why the frustration? Well, Tolly has inspired many other growers to attempt the same techniques. Some have succeeded, with excellent results. Others have not. And no one can work out why. It’s likely to have something to do with soil properties. But what?

Not for the first time, I had stumbled into a knowledge gap so wide that humanity could fall through it. Soil is a fantastically complex biological structure, like a coral reef, built and sustained by the creatures that inhabit it. It supplies 99% of our calories. Yet we know less about it than any other identified ecosystem. It’s almost a black box.

Many brilliant scientists have devoted their lives to its study. But there are major barriers. Most soil properties cannot be seen without digging, and if you dig a hole, you damage the structures you’re trying to investigate. As a result, studying even basic properties is cumbersome, time-consuming and either very expensive or simply impossible at scale. To measure the volume of soil in a field, for example, you need to take hundreds of core samples. But as soil depths can vary greatly from one metre to the next, your figure relies on extrapolation. This makes it very hard to tell whether you’re losing soil or gaining it. Measuring bulk density (the amount of soil in a given volume, which shows how compacted it might be), or connected porosity (the tiny catacombs created by lifeforms, a crucial measure of soil health), or soil carbon – at scale – is even harder.

So farmers must guess. Partly because they cannot see exactly what the soil needs, many of their inputs – fertilisers, irrigation, deep ploughing – are wasted. Roughly two-thirds of the nitrogen fertiliser they apply, and between 50% and 80% of their phosphorus, is lost. These lost minerals cause algal blooms in rivers, dead zones at sea, costs for water users and global heating. Huge amounts of irrigation water are also wasted. Farmers sometimes “subsoil” their fields – ploughing that is deep and damaging – because they suspect compaction. The suspicion is often wrong.

Our lack of knowledge also inhibits the development of a new agriculture, which may, as Tolly has done, allow farmers to replace chemical augmentation with biological enhancement.

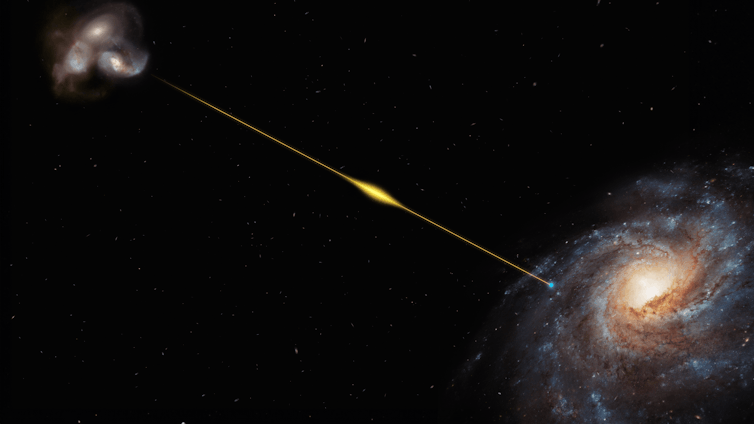

So when I came to write the book, I made a statement so vague that it reads like an admission of defeat: we needed to spend heavily on “an advanced science of the soil”, and use it to deliver a “greener revolution”. While we know almost nothing about the surface of our own planet, billions are spent on the Mars Rover programme, exploring the barren regolith there. What we needed, I argued, is an Earth Rover programme, mapping the world’s agricultural soils at much finer resolution.

I might as well have written “something must be done!” The necessary technologies simply did not exist. I sank into a stygian gloom.

At the same time, Tarje Nissen-Meyer, then a professor of geophysics at the University of Oxford, was grappling with a different challenge. Seismology is the study of waves passing through a solid medium. Thanks to billions from the oil and gas industry, it has become highly sophisticated. Tarje wanted to use this powerful tool for the opposite purpose – ecological improvement. Already, with colleagues, he had deployed seismology to study elephant behaviour in Kenya. Not only was it highly effective, but his team also discovered it could identify animal species walking through the savannah by their signature footfall.

By luck we were both attached, in different ways, to Wolfson College, Oxford, where we met in February 2022. I saw immediately that he was a thoughtful man – a visionary. I suggested a pint in The Magdalen Arms.

I explained my problem, and we talked about the limits of existing technologies. Was seismology being used to study soil, I asked. He’d never heard of it. “I guess it’s not a suitable technology then?” No, he told me, “soil should be a good medium for seismology. In fact, we need to filter out the soil noise when we look at the rocks.” “So if it’s noise, it could be signal?” “Definitely.”

We stared at each other. Time seemed to stall. Could this really be true?

Over the next three days, Tarje conducted a literature search. Nothing came up. I wrote to Prof Simon Jeffery, an eminent soil scientist at Harper Adams University, whose advice I’d found invaluable when researching the book. I set up a Zoom call. He would surely explain that we were barking up the wrong tree.

Simon is usually a reserved man. But when he had finished questioning Tarje, he became quite animated. “All my life I’ve wanted to ‘see’ into the soil,” he said. “Maybe now we can.” I was introduced to a brilliant operations specialist, Katie Bradford, who helped us build an organisation. We set up a non-profit called the Earth Rover Program, to develop what we call “soilsmology”; to build open-source hardware and software cheap enough to be of use to farmers everywhere; and to create, with farmers, a global, self-improving database. This, we hope, might one day incorporate every soil ecosystem: a kind of Human Genome Project for the soil.

We later found that some scientists had in fact sought to apply seismology to soil, but it had not been developed into a programme, partly because the approaches used were not easily scalable.

My role was mostly fixer, finding money and other help. We received $4m (£3m) in start-up money from the Bezos Earth Fund. This may cause some discomfort, but our experience has been entirely positive: the fund has helped us do exactly what we want. We also got a lot of pro-bono help from the law firm Hogan Lovells.

Tarje, now at the University of Exeter, and Simon began assembling their teams. They would need to develop an ultra-high-frequency variant of seismology. A big obstacle was cost. In 2022, suitable sensors cost $10,000 (£7,500) apiece. They managed to repurpose other kit: Tarje found that a geophone developed by a Slovakian experimental music outfit worked just as well, and cost only $100. Now one of our scientists, Jiayao Meng, is developing a sensor for about $10. In time, we should be able to use the accelerometers in mobile phones, reducing the cost to zero. As for generating seismic waves, we get all the signal we need by hitting a small metal plate with a welder’s hammer.

On its first deployment, our team measured the volume of a peat bog that had been studied by scientists for 50 years. After 45 minutes in the field, they produced a preliminary estimate suggesting that previous measurements were out by 20%. Instead of extrapolating the peat depth from point samples, they could see the wavy line where the peat met the subsoil. The implications for estimating carbon stocks are enormous.

We’ve also been able to measure bulk density at a very fine scale; to track soil moisture (as part of a wider team); to start building the AI and machine learning tools we need; and to see the varying impacts of different agricultural crops and treatments. Next we’ll work on measuring connected porosity, soil texture and soil carbon; scaling up to the hectare level and beyond; and on testing the use of phones as seismometers. We now have further funding, from the UBS Optimus Foundation, hubs on three continents and a big international team.

Eventually, we hope, any farmer anywhere, rich or poor, will be able to get an almost instant readout from their soil. As more people use the tools, building the global database, we hope these readouts will translate into immediate useful advice. The tools should also revolutionise soil protection: the EU has issued a soil-monitoring law, but how can it be implemented? Farmers are paid for their contributions “to improve soil health and soil resilience”, but what this means in practice is ticking a box on a subsidy form: there’s no sensible way of checking.

We’re not replacing the great work of other soil scientists but, developing our methods alongside theirs, we believe we can fill part of the massive knowledge gap. As one of the farmers we’re working with, Roddy Hall, remarks, the Earth Rover Program could “take the guesswork out of farming”. One day it might help everyone arrive at that happy point: high yields with low impacts. Seismology promises to shake things up.

http://www.monbiot.com

ooOOoo

George Monbiot puts his finger precisely on the point of his article: “While we know almost nothing about the surface of our own planet, billions are spent on the Mars Rover programme.”